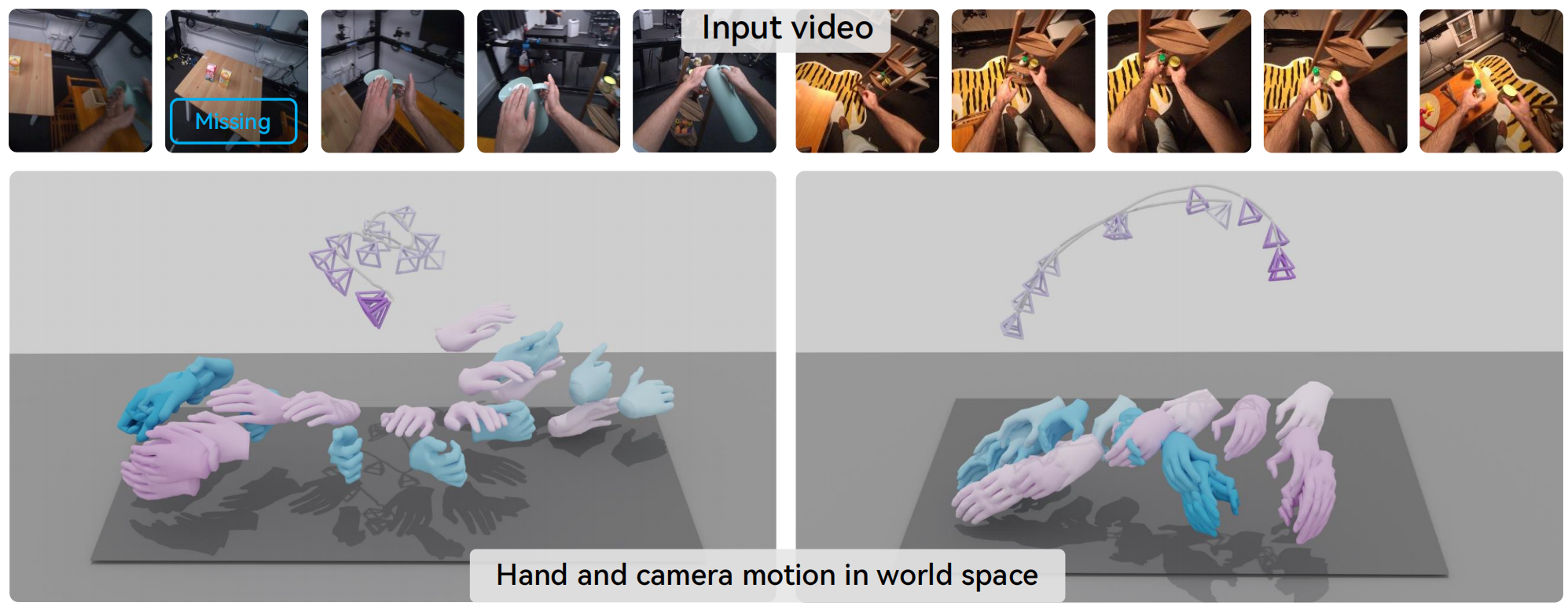

Despite advances in 3D hand pose estimation, current methods predominantly focus on single-image 3D hand reconstruction in the camera frame and overlook the world-space motion of the hands. This limitation prevents their direct use in egocentric video settings, where both the hands and the camera are continuously in motion. In this work, we propose HaWoR, a high-fidelity method for hand motion reconstruction in world coordinates from egocentric videos. We decouple the task into two parts: reconstructing the hand motion in camera space and estimating the camera trajectory in the world coordinate system. To achieve precise camera trajectory estimation, we propose an adaptive egocentric SLAM framework that addresses the shortcomings of traditional SLAM methods and remains robust under challenging camera dynamics. To keep hand motion trajectories robust even when the hands move out of the view frustum, we devise a novel motion infiller network that completes the missing frames of the sequence. Through extensive quantitative and qualitative evaluations, we demonstrate that HaWoR achieves state-of-the-art performance on both hand motion reconstruction and world-frame camera trajectory estimation across different egocentric benchmark datasets.

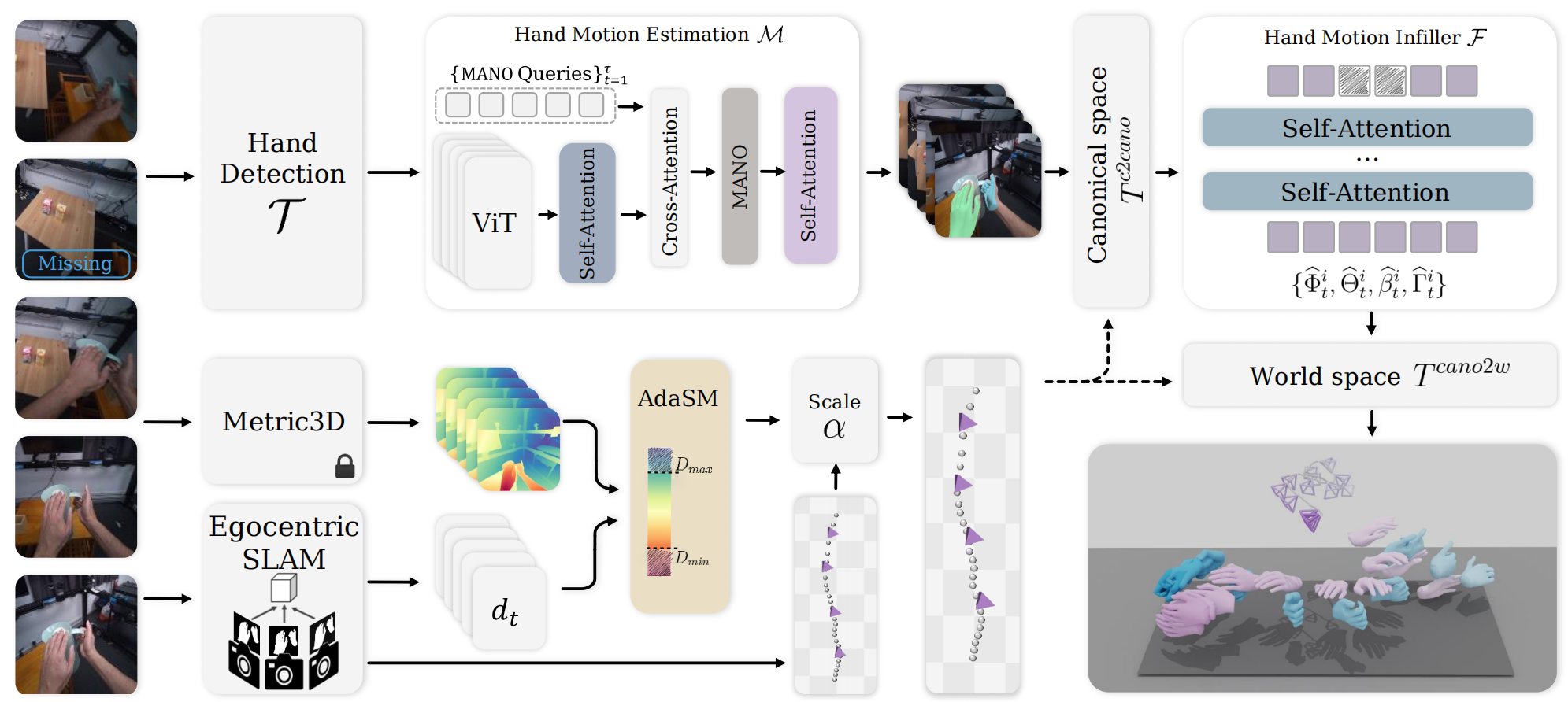

HaWoR is a high-fidelity framework for reconstructing hand motion in world coordinates from egocentric videos. It decouples the task into two parts: reconstructing the hand motion in camera space and estimating the camera trajectory in the world coordinate system.
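To illustrate the decoupling, the sketch below composes per-frame camera-space hand joints with a camera-to-world trajectory to obtain world-space joints. It is a minimal illustration of the underlying rigid transform, not the HaWoR implementation; the function name `camera_to_world` and the array layouts (`joints_cam`, `R_wc`, `t_wc`) are assumptions introduced here.

```python
import numpy as np


def camera_to_world(joints_cam, R_wc, t_wc):
    """Map camera-frame joints to the world frame, frame by frame.

    Hypothetical layout (not taken from the paper):
      joints_cam: (T, J, 3) hand joints in the camera frame
      R_wc:       (T, 3, 3) camera-to-world rotations
      t_wc:       (T, 3)    camera-to-world translations (metric units)
    Returns an array of shape (T, J, 3) with joints in the world frame.
    """
    joints_cam = np.asarray(joints_cam, dtype=float)
    R_wc = np.asarray(R_wc, dtype=float)
    t_wc = np.asarray(t_wc, dtype=float)
    # x_world = R_wc @ x_cam + t_wc, applied to every joint of every frame
    rotated = np.einsum("tij,tkj->tki", R_wc, joints_cam)
    return rotated + t_wc[:, None, :]


if __name__ == "__main__":
    # Two frames, one joint each: an identity pose, then a 90-degree
    # rotation about the z-axis combined with a shift along x.
    joints = np.array([[[0.0, 0.0, 1.0]], [[1.0, 0.0, 0.0]]])
    rotations = np.array([
        np.eye(3),
        [[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]],
    ])
    translations = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
    print(camera_to_world(joints, rotations, translations))
```

Because the two estimates only meet in this final composition, errors in the camera trajectory translate directly into world-space hand errors, which is why the trajectory must be both accurate and expressed in metric scale.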

Given an egocentric video \( \mathbf{V} \) with a set of hands detected by an off-the-shelf detector, we use a large-scale transformer-based module with two levels of data-driven motion priors to reconstruct the 3D hand motions in the camera frame. To reconstruct hand movements beyond the view frustum, we introduce a novel hand motion infiller network that completes the missing frames in the hand motion sequence. We estimate world-space camera trajectories using an adaptive egocentric SLAM module, accompanied by a foundation metric model that aligns the SLAM reconstructions to world coordinates.
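Monocular SLAM recovers the camera trajectory only up to an unknown scale, so some form of metric alignment is needed before the trajectory can be combined with metric hand estimates. The sketch below shows one common way to do this: taking the median ratio between metric depth predictions and SLAM depths over valid pixels. This is an assumption made for illustration, not a description of how HaWoR performs the alignment; `estimate_metric_scale`, `rescale_trajectory`, and the optional `valid` mask (for example, to exclude hand pixels) are hypothetical names.

```python
import numpy as np


def estimate_metric_scale(slam_depth, metric_depth, valid=None):
    """Estimate a single scale factor s such that metric ~= s * slam.

    slam_depth, metric_depth: arrays of the same shape holding
    up-to-scale SLAM depths and metric depth predictions.
    valid: optional boolean mask of pixels to use (hypothetical; for
    example, a mask that excludes moving hands).
    """
    slam_depth = np.asarray(slam_depth, dtype=float)
    metric_depth = np.asarray(metric_depth, dtype=float)
    mask = (slam_depth > 0) & (metric_depth > 0)
    if valid is not None:
        mask &= np.asarray(valid, dtype=bool)
    if not mask.any():
        raise ValueError("no valid depth pairs to estimate the scale from")
    # The median ratio is robust to a moderate fraction of outlier pixels.
    return float(np.median(metric_depth[mask] / slam_depth[mask]))


def rescale_trajectory(t_wc, scale):
    """Bring up-to-scale camera translations (T, 3) to metric units."""
    return np.asarray(t_wc, dtype=float) * scale


if __name__ == "__main__":
    slam = np.array([1.0, 2.0, 0.0, 4.0])    # 0.0 marks a missing depth
    metric = np.array([2.0, 4.0, 5.0, 8.4])
    s = estimate_metric_scale(slam, metric)
    print(s, rescale_trajectory([[1.0, 0.0, 0.0]], s))
```

Rotations are unaffected by the scale ambiguity, so only the translations need rescaling in this sketch.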

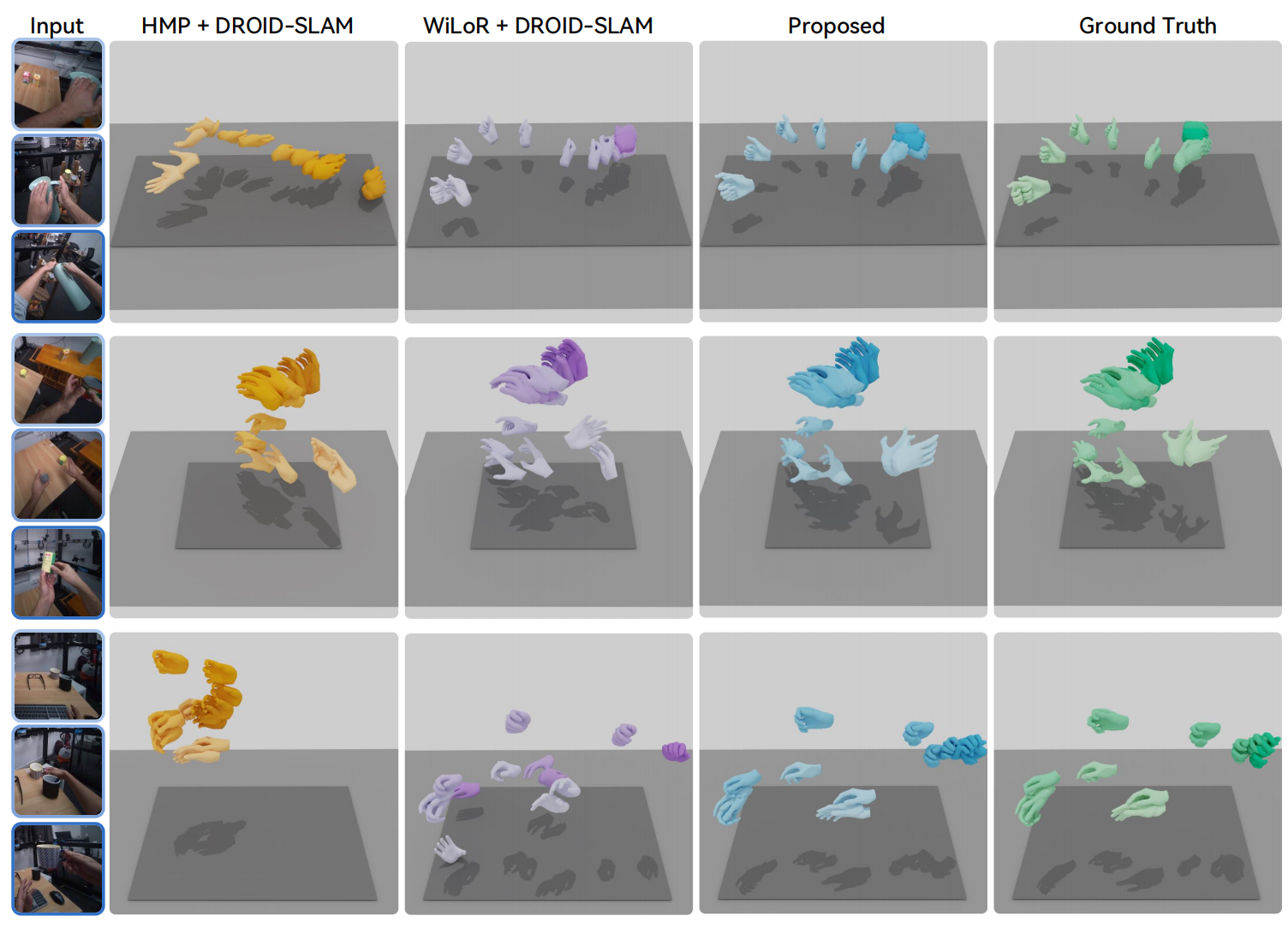

HaWoR significantly advances the state of the art in 3D reconstruction in world coordinates, outperforming previous methods on the HOT3D dataset.

@article{zhang2025hawor,
  title={HaWoR: World-Space Hand Motion Reconstruction from Egocentric Videos},
  author={Zhang, Jinglei and Deng, Jiankang and Ma, Chao and Potamias, Rolandos Alexandros},
  journal={arXiv preprint arXiv:2501.02973},
  year={2025}
}